I have often accused the local climate change denial group of shonky science in their treatment of data for their report “Are we feeling warmer yet?” (see for example New Zealand’s denier-gate). There is the clear issue of their presenting temperature data which had not been corrected for changes in station location, etc. (And, of course, there is their unwarranted accusation that our climate scientists were guilty of fraud in using the necessary adjustments.) And some of the data they used did not appear to correspond to that on the NIWA database.

Richard Treadgold (the only publicly acknowledged author of the report) consistently refused to clarify any issue I raised with him on the data. He also refused to make his data or methods available. (Although he assured me that his report was backed by his own “science team which wished to remain anonymous”). Read the emails for a summary of his attempts to avoid revealing his data and methods.

However, Treadgold seems to have inadvertently let some of his data slip by providing a link to a spreadsheet (Summary AWFWY RTreadgold.xls which is in a directory for his blog). This provides an opportunity to check in detail the level of scientific competence in this group.

Handling missing data

The spreadsheet is basically a list of temperature anomalies for the 7 stations used in the NIWA material they were critiquing. (The anomalies were presumably calculated by subtracting the average temperature for the period 1971-2000 from the actual temperature for each year. However, we don’t know that for sure unless Treadgold releases all his data and methods.) Here is a screen shot of part of the spreadsheet. A notable feature is the large amount of missing data, especially in early times. Even in later years there are data gaps.

When is an average not an average?

Notice how Treadgold has calculated the average anomaly value? Simply by taking the average of the anomalies for all 7 stations! Even when some data is missing. Even when he has values for only 1 station!

This completely invalidates Treadgold’s analysis.

How did NIWA handle the problem of missing values? In their original presentation of this data (the one attacked by Treadgold in his report) they did not make this mistake. Instead they used a “7-station composite” – effectively a reconstruction based on estimating missing data. In their most recent presentation (see Painted into a corner?) they removed the early data (where a lot of values are missing) because its reliability was questionable. They also did not have the problem of missing data in more recent times, which Treadgold had.

So how might this influence the conclusions drawn by Treadgold’s report – irrespective of his big (intentional) error in refusing to adjust data for site changes?

The first graph below is the one Treadgold obtained and included in his report.

He concluded: “The unadjusted trend is level—statistically insignificant at 0.06°C per century since 1850.”

But that conclusion is shonky. It includes data which are not true average anomalies.

One way of overcoming the problem is to remove the “average” anomaly for all years where there is missing data. I have done this in the figure below.

So, without the faulty data (“averages” calculated incorrectly) we get an unadjusted trend of 0.23 °C per century (0.00 – 0.56 °C per century at the 95% confidence level).
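The filtering step described above (dropping every year in which any of the 7 stations is missing, then fitting a linear trend) might be sketched as follows. This is a minimal illustration, not the exact calculation behind the figures quoted: it assumes the anomalies are laid out as one row of 7 values per year with `None` marking a missing station, the function names are hypothetical, and the interval uses a plain least-squares standard error with a normal approximation (z = 1.96) that ignores autocorrelation, so it is not necessarily how the 0.00 to 0.56 °C per century interval was obtained.

```python
import math


def complete_year_average(years, station_anomalies, n_stations=7):
    """Keep only years in which every station reports, and average those.

    station_anomalies: one list per year of n_stations values; None marks a
    missing station (a hypothetical convention for this sketch).
    """
    kept_years, kept_avgs = [], []
    for year, row in zip(years, station_anomalies):
        if len(row) == n_stations and all(v is not None for v in row):
            kept_years.append(year)
            kept_avgs.append(sum(row) / n_stations)
    return kept_years, kept_avgs


def ols_trend_per_century(x, y, z=1.96):
    """Ordinary least-squares slope in degrees per century, with an approximate interval.

    The interval uses a normal quantile rather than Student's t and assumes
    independent residuals, so it is indicative only.
    """
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r * r for r in residuals) / (n - 2)  # residual variance
    se = math.sqrt(s2 / sxx)                      # standard error of the slope
    return 100 * slope, 100 * (slope - z * se), 100 * (slope + z * se)
```

For example, `complete_year_average([2000, 2001, 2002, 2003], [[0.1] * 7, [0.2] * 6 + [None], [0.3] * 7, [0.4] * 7])` drops 2001, and the remaining three points give a trend of 10 degrees per century.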

Even when Treadgold refuses to apply the necessary adjustments he can get his required conclusion only by including data which were not calculated correctly. Data he should have removed.

In his report Treadgold claimed that NZ scientists “created a warming effect where none existed.” That “the shocking truth is that the oldest readings were cranked way down and later readings artificially lifted to give a false impression of warming.” And “we have discovered that the warming in New Zealand over the past 156 years was indeed man-made, but it had nothing to do with emission of CO2 – it was created by man-made adjustments of the temperature. It’s a disgrace.”

Serious charges – but completely unwarranted. And he supported them with shonky science.

With data comes responsibility

Society has quite reasonably been demanding more openness from science – especially in important areas like climate science. Consequently much of the data scientists use has been freely available for critics to use and check. More will become available in the future.

But this example shows a problem with publicly available data. Politically motivated people and organisations can use it to justify their own predetermined conclusions, by distorting the data either intentionally or unintentionally (through lack of expertise).

The science community has ways of eliminating, or at least reducing, data distortion and subjective conclusions: peer review. Not just the formal publication review but the informal checking of calculations, methods and conclusions by colleagues.

When scientific data and conclusions are publicly discussed, in the way that NIWA’s data has been, there is surely a moral responsibility on everyone involved to make their data, calculations and methods available for critique. I discussed this in my post Freedom of information and responsibility.

The fact that Richard Treadgold has refused to do this (and only made this spreadsheet available by accident) fails that moral test.

To borrow a sentence from his infamous report – It’s a disgrace.

Ken, not only are you barking mad but you are barking up NIWA’s tree as well as Richard T’s.

Please refer to NIWA’s methodology for the NZT7 series:-

————————————————————————————

1) 7-Station Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1913)

2) 7-Station Composite Temperature = 7-Station Anomaly + Average of 7-Station 1971-2000 climatologies

E.g., for 1909 when there are 4 sites, the 7-Station Composite Temp is NOT the average of the Wellington, Nelson, Lincoln and Dunedin values.

3) The climatologies are specific to the "Reference" stations at each location, which are: Auckland Aero (Auckland), East Taratahi AWS (Masterton), Kelburn (Wellington), Hokitika Aero (Hokitika), Nelson Aero (Nelson), Lincoln Broadfield EWS (Lincoln), and Musselburgh EWS (Dunedin).

———————————————————–
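The two numbered definitions can be written out as a short sketch. It assumes `None` marks a missing site and uses made-up climatologies (not NIWA’s values) in the station order listed in 3). It shows why, when all seven sites report, the composite temperature equals the simple mean of the actual temperatures (the mean of c + a is the mean of c plus the mean of a), and why the two diverge once a site is missing.

```python
def composite_anomaly(anomalies):
    """Average of the anomalies at sites with data for the year (None = missing)."""
    present = [a for a in anomalies if a is not None]
    return sum(present) / len(present)


def composite_temperature(anomalies, climatologies):
    """Composite anomaly plus the average of all seven 1971-2000 climatologies."""
    return composite_anomaly(anomalies) + sum(climatologies) / len(climatologies)


# Hypothetical climatologies (degrees C) in the order Auckland, Masterton,
# Wellington, Hokitika, Nelson, Lincoln, Dunedin. Made-up numbers, not NIWA's.
climatologies = [15.0, 13.0, 12.5, 12.0, 12.5, 11.5, 11.0]
anomalies = [0.2, -0.1, 0.3, 0.0, 0.1, -0.2, 0.4]
temperatures = [c + a for c, a in zip(climatologies, anomalies)]

# All seven sites present: composite temperature and simple mean agree.
print(round(composite_temperature(anomalies, climatologies), 4),
      round(sum(temperatures) / 7, 4))

# Auckland (the warmest hypothetical site) missing: the two no longer agree.
gappy = [None] + anomalies[1:]
print(round(composite_temperature(gappy, climatologies), 4),
      round(sum(temperatures[1:]) / 6, 4))
```

The same `composite_anomaly` reproduces the 1860 check quoted later in the thread: `composite_anomaly([0.81, None, None, None, None, None, -1.15])` rounds to -0.17.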

Note that for the composite anomaly, there is no attempt to compensate for missing data and the composite is a simple average of whatever data is available.

The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-7 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.

You better get on the phone to Dr David Wratt tomorrow and inform him that the NZT7 is "shonky" and whoever compiled the NZT7 is a "fool" according to your "statistical methods", which consist merely of deleting real data or applying the Hansen method of data invention. You mention interpolation, but is that acceptable across stations? A reference station is for comparison, not for transfer. Within a station history, however, interpolation is reasonable, but what is the cutoff? 2 years? 3 years? 5 years? But even then, interpolation does not make assumed data any more accurate than a guess.
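Within-station interpolation with a cut-off on gap length, as raised above, might look like the minimal sketch below. `max_gap` is a hypothetical parameter standing in for the "2 years? 3 years? 5 years?" question; only interior gaps between two observed years are filled, by straight-line interpolation, and the filled values remain estimates rather than measurements.

```python
def interpolate_gaps(series, max_gap=2):
    """Fill short interior gaps in one station's {year: value} record.

    Gaps of at most max_gap consecutive missing years are filled by linear
    interpolation between the neighbouring observed years; longer gaps are
    left empty. The input dict is not modified.
    """
    years = sorted(series)
    filled = dict(series)
    for y0, y1 in zip(years, years[1:]):
        gap = y1 - y0 - 1  # number of missing years between observations
        if 0 < gap <= max_gap:
            v0, v1 = series[y0], series[y1]
            for y in range(y0 + 1, y1):
                filled[y] = v0 + (v1 - v0) * (y - y0) / (y1 - y0)
    return filled
```

With `{2000: 10.0, 2003: 13.0, 2010: 20.0}` and `max_gap=2`, 2001 and 2002 are filled with 11.0 and 12.0, while the six-year gap after 2003 is left alone.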

Compensating for missing data is an issue that not even NIWA is addressing, but if they were, it's a little more involved than your method.

If the NZ geographic area is to be represented, then all data is relative to the NZ land mass centre-of-area. If, say, Masterton was missing data, the only way to compensate is to delete a corresponding value for Hokitika to restore balance in the series. This assumes that the series is geographically balanced to start with. The NZT7 is unbalanced geographically in 1909, 1912 and 1919. The inclusion of Lincoln should maintain balance about the centre-of-area when all 7 stations have data, that is if NIWA has got the spatial analysis right (who knows for sure that they have?), because there is a greater area of land in the south.

So you see Ken, compensating for missing data in the case of NZ temperatures is more of a spatial issue than a statistical issue but this does not seem to be a concern to NIWA and its NZT7.

Now I'll leave you to draft your retractions and apologies for quite a list of scurrilous assertions that you've racked up here. It may pay to engage your brain and do a little research before a repeat performance in future, because this last effort really displays a lack of acumen and civility.

2) is misleading because where there are 7 stations available, the composite temp is just a simple average of the 7 stations’ actual temperatures. It is only where there are fewer than 7, e.g. 1919, that the anomaly method is used, but in the case of 1919 the difference is only 0.03 C between a simple average of 6 stations and the method in 2).

So now missing station data is an issue?!?!!??!? The team has just spent the last ten years ranting that you are a holocaust denier if you claim missing station data is a problem. So there you go, you deny the Holocaust.

No anon (johnston?), missing station data is not a problem. It’s a fact of life.

Problems arise when incorrect methods are used to accommodate the missing data. Treadgold’s method was naively wrong. It led him to an incorrect conclusion.

But fundamentally his mistake was not to allow any scientific scrutiny of his data or methods. His mistake was so fundamental that few scientifically trained people could have missed it.

His mistake was so fundamental that few scientifically trained people could have missed it.

Plainly not true. After all, the science advisor for NZC”S”C, Chris de Freitas missed it. I expect you to retract and apologise. Oh, wait. You said “few”. Now I see what it takes to be one of the few. We owe the so few so much.

Yes, Doug, this sort of naive mistake does sort of raise issues about the credibility of scientists who lend support to such groups and their propaganda.

I did ask Treadgold if he had any scientific input or review of his “paper”. He originally said no and then waffled when Vincent Gray admitted he had been asked to check the “paper.” (Vincent acknowledged that he should have seen that their rejection of any need for adjustments was wrong).

Treadgold has several times assured me that he has a “science team” but they “wish to remain anonymous.”

Scientists who support groups like this should not remain passive when these sorts of errors are made. They should put their foot down and demand retractions, etc.

Not to do so only endangers their own credibility.

Am I missing something here? According to NIWA’s own original spreadsheet, and their later replacement spreadsheet, they calculated average anomalies in exactly the same manner.

In the original 7SS, the years 1853 and 1854 used only one station anomaly: Dunedin. From 1852 to 1855 only two stations were used: Auckland and Dunedin.

The 11SS did the same.

At the top of their new replacement spreadsheet it is clearly written:

“1) 7-Station Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1913)" as Richard C has pointed out above.

Are you accusing NIWA of shonky science? Have you written to them to complain?

You claim:

"[NIWA] did not make this mistake. Instead they used a “7-station composite” – effectively a reconstruction based on estimating missing data."

What evidence do you have for this? The spreadsheets I have seen so far do not have infilled data.

I suspect you have misunderstood the 7-Stn Composite Temp field. NIWA calculates that by taking the anomalies they have (eg: four stations for 1909) and calculating the Temperature (not anomaly) by adding the averaged anomaly to the 1971-2000 average. However, it is the Anomaly value that is used in their plot.

At the top of NIWA’s 11-station series is the following:

“NB: The 11-site average is the average over whatever sites were not missing for the year in question”

At the top of the 7SS series is this:

“NB: 7-Stn Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1908)"

A quick check of the data in the spreadsheet confirms this: Eg: 1860 Auckland anomaly is 0.81C, Dunedin -1.15C. Average: -0.17C, which is what is listed under 7-Stn Composite Anomaly for 1860.

Ken, it seems you have made a basic and obvious error in this post. You clearly failed to check anything before you posted (a glance at the actual NIWA spreadsheets would have shown you your mistake), and you’ve said some fairly defamatory things about Richard Treadgold.

You wrote:

“Scientists who support groups like this should not remain passive when these sorts of errors are made. They should put their foot down and demand retractions, etc.

Not to do so only endangers their own credibility.”

You should apologise to Richard Treadgold forthwith and retract everything you’ve said in this post.

(Doug Mackie needs to apologise to Chris de Freitas too, for some reason his name was dragged into this.)

“The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-7 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.”

Should read

The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-6 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.

(4-7 changed to 4-6)

BTW, how are those retractions and apologies coming along?

I see Gareth Renowden has also published a piece linking to this post.

He really should have checked the data first. I posted the following, but it disappeared into moderation. Maybe it will reappear soon.

——————————————

Congratulations to Ken for unmasking this fraud. I eagerly await the fulsome apology Treadgold owes to Jim Salinger, NIWA and the public of New Zealand.

I’m afraid it’s actually Ken who must apologise, not only to Richard Treadgold, but also to NIWA and Salinger. It seems he didn’t check – Treadgold actually used NIWA’s methodology. Gareth also didn’t check, and now looks a bit foolish.

The resulting annual anomaly series for individual sites still has missing values in some years. This can include the situation where a site has not yet started (eg, Invercargill, pre-1949), or has closed (eg, Molesworth, 1994).

The ‘eleven’-station average is simply the average of the individual station anomalies over those stations where the annual anomaly is available.

A glance at the spreadsheets for the original 7SS, the 11SS and the new NZ7T series all confirm that NIWA calculates average anomalies using data from available sites where others are missing. Which is exactly what Treadgold has done, following their method.

When NZC”S”C makes science-based claims I think it is reasonable to assume that they have had their science claims checked by their science advisor, Chris de Freitas.

There are several possibilities concerning the involvement of Chris de Freitas.

a) He knew about the errors before they were published.

b) He did not know because he does not have the skills to have noticed.

c) He did not know because he wasn’t asked by anyone to check the analysis, saw nothing odd about the claims made by an amateur, and chose not to look into science claims made by an organisation for which he is science advisor.

Have I missed a possibility?

The least culpable is c, and in that case I expect he will very soon issue a statement to that effect and will ask NZC”S”C to add a note to future press releases etc to let people know what has and has not been checked for science content so that his reputation is not further damaged.

Calm down Manfred and I will take you through this.

1: You have seven sites distributed through the country. The true temperatures at these sites are 10, 12, 15, 9, 16, 18, and 16. The mean temperature would then be 13.71.

But for some reason you only have data for 2 of the sites – say the first 2. Your calculated average would be 11.

Now clearly that data point will be wrong.

You should either not include it, or somehow estimate the missing data to obtain an estimate of the 7 station average.

2: Now you plot temperature vs time. Most years have temperatures for each station (7) but some have data for less. Some only 1 or 2.

3: In the absence of statistically estimating missing data, which gives you the correct plot? A: All the data points, including those based on only 1 or 2 (or fewer than 7) stations?

B: Omit the “averages” where the data includes fewer than 7 stations?

4: Now, if you analyse the two sets of data and get different values for the temperature trend, which is more correct? Where the “averages” with missing data are included, or where they are excluded?

5: Forget about how Treadgold and/or NIWA treated the data. In your judgement, which is the correct method?

I have some figures illustrating this using the 7 station data which I can post – but I am trying to get agreement in principle of how to analyse such data.
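The arithmetic of step 1 can be checked directly. The seven temperatures sum to 96, so the full mean is 96/7; averaging only the first two sites gives a noticeably lower value. The helper name `mean` is just for this sketch.

```python
def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)


true_temps = [10, 12, 15, 9, 16, 18, 16]  # the seven site temperatures in step 1
print(round(mean(true_temps), 2))  # 13.71
print(mean(true_temps[:2]))        # 11.0 (only the first two sites)
```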

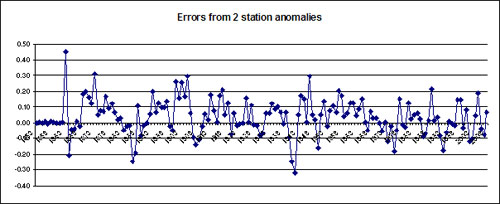

Manfred, here is a plot showing the discrepancy between averages of just the Dunedin and Auckland stations compared with the 7 station average (or composite values). The Y axis is the 7 station composite minus the 2 station “average.”

Now – what do you think you should do about the data points based only on 2 stations if you are presenting the plot as a 7 station average?

“Calm down Manfred…”

I’m actually quite calm. I understand very well what you’re saying, and I’ve looked carefully at the spreadsheets and graphs.

I suspect you are not understanding the concepts behind the calculation of anomalies. I suspect this because in your example above you use temperatures.

You need to go away for a while and read up on anomalies, and why they are used to combine temperature histories from spatially distributed sites. You really shouldn’t be posting inflammatory stuff without understanding the very basic concepts – you end up making a fool of yourself and others.

This is climatology 101, or even pre-requisite. I would expect more from someone who claims to be a scientist.

Manfred – I used temperatures here to simplify the situation. I understand the calculation of anomalies and am purely trying to get your acceptance in principle on what is the correct way to handle “averages” based on missing data.

(I only referred in passing to NIWA’s estimation of “7-station composite” in my post because to have described the detail would have lost people.)

Can you please answer my question and we can get to anomalies. (I also have a plot using anomalies which shows essentially similar things to the plot above).

OK Manfred – here is the graph representing the problem using the anomalies for the 7 station data. Again this is the 7 station composite anomalies – 2 station “average” anomalies.

What do you think is the best way to handle data points where only a few stations are used to obtain the “average?”

I see you are still confused. OK, this is how it works:

You can’t just average temperatures from sites across a country. Each temperature average will be different. Some will be cooler, some hotter. So you have to baseline them to a common average first. In the case of NIWA they have chosen 1971-2000, but any common period will do. The resultant value is an anomaly.

For each station, if you have annual values, you calculate the average annual value for all the years in your baseline period. Then you subtract this average baseline value from each annual temperature. You end up with an anomaly for that year for that site. Of course, if you’re using monthly or even daily data, then you must calculate monthly or daily anomalies, but you get the point.
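The per-station anomaly calculation just described can be sketched for annual data as below. It assumes one station's record is a `{year: annual mean temperature}` dict and uses the 1971-2000 baseline mentioned above; for monthly data the same step would be repeated per calendar month. A station with no baseline years at all would raise `ZeroDivisionError`, which this sketch does not try to handle.

```python
def station_anomalies(annual_means, base_start=1971, base_end=2000):
    """Convert one station's {year: annual mean temperature} into anomalies.

    The baseline is the average over the years in [base_start, base_end]
    that are present in the record.
    """
    baseline = [t for year, t in annual_means.items() if base_start <= year <= base_end]
    base = sum(baseline) / len(baseline)
    return {year: t - base for year, t in annual_means.items()}
```

For a station averaging 12.0 °C over 1971-2000, a year at 11.5 °C becomes an anomaly of -0.5, and the baseline-period anomalies average to zero by construction.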

You can’t combine temperatures (your example is nonsensical), but you can combine anomalies, since they’re baselined. Is it best practice? Of course not, ideally one wants all 7 station data. But if you’ve only got two stations, and you insist (like NIWA) on using just two stations, then by combining anomalies instead of temperatures you don’t get the problem you’re making such a big deal about above.
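The point about combining anomalies rather than temperatures can be illustrated with two hypothetical sites, one warm and one cool, that are both 0.2 °C above normal in two successive years. When the warm site drops out, the average temperature shows a spurious step, while the average anomaly stays level. This sketch only addresses that offset; it says nothing about whether a two-site value is representative of seven sites, which is the separate question argued over in this thread.

```python
def average_present(values):
    """Mean of the non-missing values (None = missing)."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present)


# Two hypothetical sites: a warm one (15.0 C climatology) and a cool one (10.0 C).
climatology = [15.0, 10.0]
# Both sites are 0.2 C above normal in both years, but the warm site is missing in 2001.
anomaly_rows = {2000: [0.2, 0.2], 2001: [None, 0.2]}

for year, anomalies in anomaly_rows.items():
    temps = [None if a is None else c + a for a, c in zip(anomalies, climatology)]
    print(year, round(average_present(temps), 2), round(average_present(anomalies), 2))
# 2000 12.7 0.2
# 2001 10.2 0.2
```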

Clearly, the NZCSC understood this, and you didn’t.

Remember, NIWA’s 7SS used only one station, then two, four etc. Any criticism of Treadgold’s method is a criticism of NIWA’s.

Again, Manfred, you seem to be avoiding my question on the correct way of handling averages when data is missing. That is the issue.

I understand completely about anomalies and you appear to be attempting diversion away from the principle by a pointless pontification on these. To remove that obstacle to your response my last figure displayed anomalies.

Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations. And is it valid to calculate trends when there are a lot of data points “averaging” only 2 stations instead of 7?

How do you advise handling this situation?

You appear to be saying “ideally one wants all 7 station data.” But what if you haven’t? Should you include the poor data, or calculate trends including 2 station averages? Should this be done when there are long periods of missing station data, for example the 60 years shown in the plots of my post?

Manfred, I think you need to do some more reading. I think you are getting confused between the 7SS and the 11SS. The original NZTR, the one that Treadgold was complaining about, is a fully composite series – that is, it does not have any missing annual values. The 11SS was produced later, using only sites with a continuous series (i.e. no adjustments of the sort that get RT all huffy). The 11SS does therefore have missing annual values, but it does always have at least 8 station values for most of its length. This is quite different from RT’s so-called composite, which for large chunks is the average of only 2 or 3 stations.

The original spreadsheet for the 7SS (not the latest one) ran from 1853, and looked almost exactly like the NZCSC one, except for unadjusted values instead of Salinger’s.

Manfred – you are not answering the basic question.

“Remember, NIWA’s 7SS used only one station, then two, four etc. Any criticism of Treadgold’s method is a criticism of NIWA’s.” This is beside the point. Before you can judge either of those people or institutions wrong you need to be clear about the principle.

Is it appropriate to include such large stretches of “averages” which refer to only a few, or even one, station?

“Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations.”

No, but NIWA did. Therefore to compare with NIWA, it was necessary to copy what they did.

“And is it valid to calculate trends when there are a lot of data points “averaging” only 2 stations instead of 7?”

If NIWA chose to include this data then it is valid, when comparing unadjusted versus adjusted data.

Look at the 11SS. They said “Note that not all stations have annual mean temperature values for all years in 1931–2008.” And yet they calculated the trend using all the data. Are you going to post a criticism of the 11SS?

Generally one uses all the data for the trend. If you want to drop data for legitimate reasons, do so before you plot the graph or calculate the trend.

NIWA chose to use all the data in their graph, but then they chose to highlight only the 1908-2008 trend to confirm their “last 100 years” claim of 0.9C/century. This was inadvisable, since a glance at their graph shows the data declining from 1853 to the lowest point about 1908, which immediately opens them to criticisms of cherry-picking partial trends. Had they correctly plotted the full trend, it would have been less than 0.9C/century.

I see they have corrected this now with their new graph, which only runs from 1909. But the new graph did not exist at the time Treadgold’s graph was published.

In all, I see nothing wrong with the approach adopted by Treadgold, which was to follow NIWA’s lead in order to determine the adjustments.

Face it Ken, you stuffed up. Your point wasn’t about the minutiae of trend analysis. Your point was that you thought NIWA had infilled data.

From above:

“Notice how Treadgold has calculated the average anomaly value? Simply by taking the average of the anomalies all 7 stations! Even when some data is missing. Even when he has values for only 1 station!

This completely invalidates Treadgold’s analysis.

How did NIWA handle the problem of missing values? In their original presentation of this data (the one attacked by Treadgold in his report) they did not make this mistake. Instead they used a “7-station composite” – effectively a reconstruction based on estimating missing data.”

Yet NIWA did exactly that. They took the average even when they had the value for only one station. They did it for all their graphs. They didn’t infill. You misunderstood the 7-station composite temperature completely. I know it, and you know it.

Manfred – you say ““Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations.”

No, but NIWA did.”

Well, that’s an admission, isn’t it? You believe this procedure was wrong. (Adding “but NIWA did” doesn’t make it right, does it?)

So you accept my argument that it is wrong to include all the early data where only a few stations contribute. That any derivation of trends may be faulty.

If NIWA did this then they are just as wrong. Although, in fairness they calculated averages of anomalies to use as a basis for then calculating their composite temperatures. As they say, where less than 7 station data was available they calculated a composite temperature using the anomalies of the available data. Where all 7 were available their composite temperature was a simple average.

(And no, Manfred, my point wasn’t that I “thought NIWA had infilled data.” Not at all – I was familiar with the spreadsheet. And effectively they were trying to compensate for missing data by using the Average temperatures from 1971-2000.)

It’s not clear from the spreadsheet I have what they then used to obtain data for their plot. (Unlike Treadgold’s spreadsheet, it doesn’t include the graph.) However, calculating an anomaly from the composite temperature in the normal way would be faulty because it just gives you your original data. (There would be no point in calculating a composite temperature). If they did use that procedure then they were wrong – although I can appreciate that it is one way of handling isolated missing data. A detailed statistical procedure would be better.

However, we now have this data re-examined, and new adjustments worked out independently. In the process the decision was made not to use the early data which was described as unreliable. One reason for this would have been the huge missing blocks of data. A wise decision, I think.

And let’s not forget that after this independent analysis the resulting plot was extremely similar to the original one for that same time period.

Which rather exposed Treadgold’s malicious claims, didn’t it?

And Manfred, in all this you have had access to NIWA’s data. I have been asking Treadgold for a year to provide me his data and methods. I only have his spreadsheet now because he mistakenly revealed it in a directory on his blog.

“And Manfred, in all this you have had access to NIWA’s data.”

You mean via CliFlo? The same way you do? Or do you mean the 7SS that was published by NIWA online for anybody to download?

“And let’s not forget that after this independent analysis the resulting plot was extremely similar to the original one for that same time period.

Which rather exposed Treadgold’s malicious claims, didn’t it?”

It wasn’t an independent analysis. Who performed it? NIWA! Who reviewed it? The BoM! Who said they simply read the report but didn’t look at the data? The BoM!

“And effectively they were trying to compensate for missing data by using the Average temperatures from 1971-2000).”

Still don’t get it, do you?

“However, calculating an anomaly from the composite temperature in the normal way would be faulty because it just gives you your original data. (There would be no point in calculating a composite temperature). If they did use that procedure then they were wrong “

Oh for heaven’s sake! I give up, you still don’t understand the basics!

Manfred – I was contrasting the normal availability of data from institutions like NIWA with the denial of access to data and information by Treadgold.

It was an independent analysis. Salinger no longer worked for NIWA. The analysis was done by different people starting from scratch. It appears that you don’t consider honest scientific work as independent.

Actually, Manfred, I don’t think you get it. Could you detail your understanding of how NIWA calculated “composite temperatures” in the original spreadsheet? It’s not completely straightforward but I managed it. And calculation of “average” anomalies for less than 7 station data was involved.

I have worked it out, Manfred. And I think you agree. Using average anomalies for data with missing stations over long blocks of time is shonky. Fortunately that question no longer arises with the NIWA graph as the missing data is relatively isolated.

However, I would still prefer a statistical procedure to handle any missing data – especially as it can be done as part of calculating trends.
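One example of a statistical procedure that handles missing data as part of calculating the trend is a least-squares fit with a separate offset for each station and a single common linear trend (a "fixed effects" regression). It uses every available station-year without averaging across stations or infilling gaps. This is a sketch under stated assumptions (one shared linear trend, independent errors, hypothetical function name), not the method used by NIWA or in this post. Because each station's offset is estimated, the same fit works on raw temperatures as well as anomalies.

```python
def common_trend_per_century(station_series):
    """Least-squares slope for value = offset[station] + slope * year.

    station_series maps a station name to {year: value}; missing years are
    simply absent. Centring years and values within each station removes the
    per-station offsets (the "within" estimator), so no infilling is needed.
    Returns the slope in units per century.
    """
    num = 0.0
    den = 0.0
    for series in station_series.values():
        if len(series) < 2:
            continue  # a single year carries no information about the slope
        year_mean = sum(series) / len(series)            # mean of the years (dict keys)
        value_mean = sum(series.values()) / len(series)  # mean of the values
        for year, value in series.items():
            num += (year - year_mean) * (value - value_mean)
            den += (year - year_mean) ** 2
    return 100 * num / den
```

For two stations with offsets of 12 °C and 15 °C, overlapping only partly in time and with a gap in one record, both warming at 0.01 °C per year, the function returns a trend of 1.0 °C per century.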

It wasn’t an independent analysis. Who performed it? NIWA! Who reviewed it? The BoM! Who said they simply read the report but didn’t look at the data? The BoM!

Manfred, what exactly are you saying here? Please clarify what inferences you believe might be drawn from your observation above.

Manfred – my bad. I was looking at the revised 7SS, not the original. You are correct about the earlier data.

However, the report says that the earlier data were removed for precisely this reason, though – that having fewer data points makes the averages less reliable.

I am certainly not shouting – if anything this thread has gone very quiet since my last reply to Manfred. (And quite frankly I expected more response. Treadgold has stayed away for example).

He seems to have accepted that it was wrong to include such faulty data.

In any case, calculating a simple average of anomalies is going to introduce a lot of variation when there are few stations. This would have the effect of masking any trend.

It seems that NIWA’s original strategy (for using earlier data) would have reduced the variation by dividing the sum by 7 (i.e. assuming that the anomalies were zero for the stations with no data).
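Both points can be illustrated with a small simulation: averaging only the stations that report gives a noisier series when few report, while dividing the sum by 7 (equivalent to setting the missing anomalies to zero) damps that noise at the cost of pulling those years toward the baseline. The sketch assumes independent site anomalies with a hypothetical 0.5 °C spread; real New Zealand sites are correlated, so the actual differences would be smaller. The function names are illustrative only.

```python
import random
import statistics


def mean_of_available(row):
    """Average over the stations that reported (None = missing)."""
    present = [v for v in row if v is not None]
    return sum(present) / len(present)


def sum_over_seven(row):
    """Sum of the available anomalies divided by 7, i.e. missing stations count as 0."""
    return sum(v for v in row if v is not None) / 7


def simulated_years(n_present, n_years=2000, site_sd=0.5, seed=1):
    """Hypothetical years of independent site anomalies, with 7 - n_present gaps."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, site_sd) for _ in range(n_present)] + [None] * (7 - n_present)
            for _ in range(n_years)]


def spread(rows, combine):
    """Year-to-year standard deviation of the combined series."""
    return statistics.pstdev([combine(row) for row in rows])


two = simulated_years(2)
seven = simulated_years(7)
print("2 sites, mean of available:", round(spread(two, mean_of_available), 3))
print("7 sites, mean of available:", round(spread(seven, mean_of_available), 3))
print("2 sites, sum / 7:          ", round(spread(two, sum_over_seven), 3))
```

Under these assumptions the theoretical spreads are about 0.5/√2 ≈ 0.35, 0.5/√7 ≈ 0.19 and 0.5·√2/7 ≈ 0.10 respectively, and the simulated values should land close to those.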


Good analysis Ken. I suppose we can see why he was not keen to operate with the same level of transparency as he demands of others.




Well done Ken. Persistence pays!


Ken, not only are you barking mad but you are barking up NIWA’s tree as well as Richard T’s.

Please refer to NIWA’s methodology for the NZT7 series:-

————————————————————————————

1) 7-Station Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1913)

2) 7-Station Composite Temperature = 7-Station Anomaly + Average of 7-Station 1971-2000 climatologies

E.g., for 1909 when there are 4 sites, the 7-Station Composite Temp is NOT the average of the Wellington, Nelson, Lincoln and Dunedin values.

3) The climatologies are specific to the "Reference" stations at each location, which are: Auckland Aero (Auckland), East Taratahi AWS (Masterton), Kelburn (Wellington), Hokitika Aero (Hokitika), Nelson Aero (Nelson), Lincoln Broadfield EWS (Lincoln), and Musselburgh EWS (Dunedin).

———————————————————–
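The two numbered NIWA steps quoted above can be sketched as follows. This is a minimal illustration, not NIWA's code: the dictionary layout and the function names are hypothetical. It shows the distinction the steps draw: step 1 divides by the number of stations that actually reported, while step 2 always adds the average of all seven reference-station climatologies.

```python
from statistics import mean

# Hypothetical layout: for one year, a dict mapping station name -> annual
# anomaly (°C). Stations with no data that year are simply absent.

def composite_anomaly(anomalies_for_year):
    """Step 1: average of the anomalies at whichever sites have data that year."""
    if not anomalies_for_year:
        raise ValueError("no station data for this year")
    return mean(anomalies_for_year.values())

def composite_temperature(anomalies_for_year, climatologies):
    """Step 2: composite anomaly plus the average of the 1971-2000
    climatologies of ALL seven reference stations, including any
    station that did not report in this particular year."""
    return composite_anomaly(anomalies_for_year) + mean(climatologies.values())
```

For a year like 1909 with four reporting sites, `composite_anomaly` averages four values, but `composite_temperature` still adds the seven-station climatology average, which is why the result is not the plain average of the four actual temperatures.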

Note that for the composite anomaly, there is no attempt to compensate for missing data and the composite is a simple average of whatever data is available.

The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-7 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.

You had better get on the phone to Dr David Wratt tomorrow and inform him that the NZT7 is "shonky" and whoever compiled the NZT7 is a "fool" according to your "statistical methods", which consist merely of deleting real data or applying the Hansen method of data invention. You mention interpolation, but is that acceptable across stations? A reference station is for comparison, not for transfer. Within a station history, however, interpolation is reasonable, but what is the cutoff? 2 years? 3 years? 5 years? But even then, interpolation does not make assumed data any more accurate than a guess.

Compensating for missing data is an issue that not even NIWA is addressing, but if they were, it's a little more involved than your method.

If the NZ geographic area is to be represented then all data is relative to the NZ land mass centre-of-area . If say Masterton was missing data, the only way to compensate is to delete a corresponding value for Hokitika to restore balance in the series, This is assuming that the series is geographically balanced to start with, The NZT7 is unbalanced geographically in 1909, 1912 and 1919. The inclusion of Lincoln should maintain balance about the centre-of-area for when all 7 stations have data, that is if NIWA has got the spatial analysis right (who knows for sure that they have?) because there is a greater area of land in the south.

So you see Ken, compensating for missing data in the case of NZ temperatures is more of a spatial issue than a statistical issue but this does not seem to be a concern to NIWA and its NZT7.

Now I'll leave you to draft your retractions and apologies for quite a list of scurrilous assertions that you've racked up here. It may pay to engage your brain and do a little research before a repeat performance in future, because this last effort really displays a lack of acumen and civility.

Richard Cummings – did you read what you wrote?

You are directly contradicting yourself.

2) is misleading because where there are 7 stations available, the composite temp is just a simple average of the 7 stations' actuals. It is only where there are fewer than 7 (e.g. 1919) that the anomaly method is used, but in the case of 1919 the difference is only 0.03 C between a simple average of 6 stations and the method in 2).
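The point about step 2) can be checked algebraically, assuming the method is applied exactly as quoted in NIWA's note. The symbols are introduced here for illustration only: write $T_i$ for a station's actual annual mean, $C_i$ for its 1971-2000 climatology, $A_i = T_i - C_i$ for its anomaly, and $S$ for the set of $k$ stations that reported in a given year.

```latex
\begin{align}
T_{\text{comp}} &= \frac{1}{k}\sum_{i \in S} A_i + \frac{1}{7}\sum_{i=1}^{7} C_i \\
\text{if } k = 7:\quad T_{\text{comp}} &= \frac{1}{7}\sum_{i=1}^{7} (T_i - C_i) + \frac{1}{7}\sum_{i=1}^{7} C_i = \frac{1}{7}\sum_{i=1}^{7} T_i \\
\text{if } k < 7:\quad T_{\text{comp}} - \frac{1}{k}\sum_{i \in S} T_i &= \frac{1}{7}\sum_{i=1}^{7} C_i - \frac{1}{k}\sum_{i \in S} C_i
\end{align}
```

So with all seven stations the composite temperature is exactly the simple average, and with fewer it differs from the simple average of the reporting stations only by the gap between the seven-station and the $k$-station climatology averages, a fixed amount that does not depend on that year's weather. On this reading, the 0.03 C quoted for 1919 would be that climatology gap for the six reporting stations.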

So now missing station data is an issue?!?!!??!? The team just spent the last ten years ranting that you are a holocaust denier if you claim missing station data is a problem. So there you go, you deny the Holocaust.

No anon (johnston?), missing station data is not a problem. It’s a fact of life.

Problems arise when incorrect methods are used to accommodate the missing data. Treadgold’s method was naively wrong. It led him to an incorrect conclusion.

But fundamentally his mistake was not to allow any scientific scrutiny of his data or methods. His mistake was so fundamental that few scientifically trained people could have missed it.

Until Treadgold releases his data sets and methodology he ought to be treated as a crank and any of his claims dismissed. Simple really.

Ken,

Plainly not true. After all, the science advisor for NZC”S”C, Chris de Freitas missed it. I expect you to retract and apologise. Oh, wait. You said “few”. Now I see what it takes to be one of the few. We owe the so few so much.

Yes, Doug, this sort of naive mistake does sort of raise issues about the credibility of scientists who lend support to such groups and their propaganda.

I did ask Treadgold if he had any scientific input or review of his “paper”. He originally said no and then waffled when Vincent Gray admitted he had been asked to check the “paper.” (Vincent acknowledged that he should have seen that their rejection of any need for adjustments was wrong).

Treadgold has several times assured me that he has a “science team” but they “wish to remain anonymous.”

Scientists who support groups like this should not remain passive when these sorts of errors are made. They should put their foot down and demand retractions, etc.

Not to do so only endangers their own credibility.

Am I missing something here? According to NIWA’s own original spreadsheet, and their later replacement spreadsheet, they calculated average anomalies in exactly the same manner.

In the original 7SS, the years 1853 and 1854 used only one station anomaly: Dunedin. From 1852 to 1855 only two stations were used: Auckland and Dunedin.

The 11SS did the same.

At the top of their new replacement spreadsheet it is clearly written:

“1) 7-Station Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1913)” as Richard C has pointed out above.

Are you accusing NIWA of shonky science? Have you written to them to complain?

You claim:

"[NIWA] did not make this mistake. Instead they used a “7-station composite” – effectively a reconstruction based on estimating missing data."

What evidence do you have for this? The spreadsheets I have seen so far do not have infilled data.

I suspect you have misunderstood the 7-Stn Composite Temp field. NIWA calculates that by taking the anomalies they have (eg: four stations for 1909) and calculating the Temperature (not anomaly) by adding the averaged anomaly to the 1971-2000 average. However, it is the Anomaly value that is used in their plot.

At the top of NIWA’s 11-station series is the following:

“NB: The 11-site average is the average over whatever sites were not missing for the year in question”

At the top of the 7SS series is this:

“NB: 7-Stn Composite Anomaly = Average of anomalies at individual sites where there is data for that year (<7 sites before 1908)"

A quick check of the data in the spreadsheet confirms this:

Eg: 1860 Auckland anomaly is 0.81C, Dunedin -1.15C. Average: -0.17C, which is what is listed under 7-Stn Composite Anomaly for 1860.

Surely this is not hard to comprehend?
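The 1860 check above is simple arithmetic and can be reproduced in a couple of lines. The two anomaly values are the ones quoted in the comment; the variable names are only for illustration.

```python
# Reproduces the 1860 check: the composite anomaly is the plain mean of the
# station anomalies that exist for that year (values in °C, from the comment).
anomalies_1860 = {"Auckland": 0.81, "Dunedin": -1.15}

composite_1860 = sum(anomalies_1860.values()) / len(anomalies_1860)
print(f"{composite_1860:.2f}")
```

The sum is -0.34 and dividing by the two reporting stations gives -0.17, matching the 7-Stn Composite Anomaly listed for 1860.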

Ken, it seems you have made a basic and obvious error in this post. You clearly failed to check anything before you posted (a glance at the actual NIWA spreadsheets would have shown you your mistake), and you’ve said some fairly defamatory things about Richard Treadgold.

You wrote:

You should apologise to Richard Treadgold forthwith and retract everything you’ve said in this post.

(Doug Mackie needs to apologise to Chris de Freitas too; for some reason his name was dragged into this.)

Correction

“The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-7 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.”

Should read

The composite temperature is treated much differently but again there is no attempt to compensate for missing data before the 4-6 station anomaly average is added to the average of 7-Station 1971-2000 climatologies and even then there is not that much difference to a simple average.

(4-7 changed to 4-6)

BTW, how are those retractions and apologies coming along?

I see Gareth Renowden has also published a piece linking to this post.

He really should have checked the data first. I posted the following, but it disappeared into moderation. Maybe it will reappear soon.

——————————————

I’m afraid it’s actually Ken who must apologise, not only to Richard Treadgold, but also to NIWA and Salinger. It seems he didn’t check – Treadgold actually used NIWA’s methodology. Gareth also didn’t check, and now looks a bit foolish.

A quick glance here:

http://www.niwa.co.nz/news-and-publications/news/all/2009/nz-temp-record/temperature-trends-from-raw-data/technical-note-on-the-treatment-of-missing-data

will show you that both you and Ken have blundered badly with your posts:

A glance at the spreadsheets for the original 7SS, the 11SS and the new NZ7T series all confirm that NIWA calculates average anomalies using data from available sites where others are missing. Which is exactly what Treadgold has done, following their method.

Apologies are in order, I suspect.

When NZC”S”C makes science-based claims I think it is reasonable to assume that they have had their science claims checked by their science advisor, Chris de Freitas.

There are several possibilities concerning the involvement of Chris de Freitas.

a) He knew about the errors before they were published.

b) He did not know because he does not have the skills to have noticed.

c) He did not know because he wasn’t asked by anyone to check the analysis, saw nothing odd about the claims made by an amateur, and chose not to look into science claims made by an organisation for which he is science advisor.

Have I missed a possibility?

The least culpable is c, and in that case I expect he will very soon issue a statement to that effect and will ask NZC”S”C to add a note to future press releases etc to let people know what has and has not been checked for science content so that his reputation is not further damaged.

“Have I missed a possibility?”

Yes, a rather obvious one. There is no error. Read above.

Calm down Manfred and I will take you through this.

1: You have seven sites distributed through the country. The true temperatures at these sites are 10, 12, 15, 9, 16, 18, and 16. The mean temperature would then be 13.71.

But for some reason you only have data for 2 of the sites – say the first 2. Your calculated average would be 11.

Now clearly that data point will be wrong.

You should either not include it, or somehow estimate the missing data to obtain an estimate of the 7-station average.

2: Now you plot temperature vs time. Most years have temperatures for each station (7) but some have data for fewer. Some only 1 or 2.

3: In the absence of statistically estimating missing data which gives you the correct plot?

A: All the data points, including those which are based on only 1, or 2, or fewer than 7 stations?

B: Omit the “averages” where the data include fewer than 7 stations?

4: Now if you analyse the two sets of data and get different values for the temperature trend, which is more correct? Where the “averages” with missing data are included, or where they are excluded?

5: In your judgement, forgetting about how Treadgold and/or NIWA treated the data, which is the correct method?

I have some figures illustrating this using the 7 station data which I can post – but I am trying to get agreement in principle of how to analyse such data.
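The arithmetic in point 1 and the choice between options A and B can be sketched in Python. The seven illustrative temperatures come from point 1; the `series` layout of (year, average, number of stations) tuples, the function names and the `min_stations` cut-off are hypothetical, and an ordinary least-squares slope is assumed as the trend measure.

```python
from statistics import mean

# Point 1: the illustrative "true" temperatures (°C) at seven sites.
true_temps = [10, 12, 15, 9, 16, 18, 16]
full_mean = mean(true_temps)          # all seven sites: 96 / 7, about 13.71
partial_mean = mean(true_temps[:2])   # only the first two sites reported: 11
bias = partial_mean - full_mean       # about -2.71 for this year

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    x_bar, y_bar = mean(xs), mean(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

def trend_per_century(series, min_stations=1):
    """series: list of (year, average, n_stations) tuples.

    min_stations=1 keeps every point (option A); min_stations=7 drops the
    "averages" built from fewer than seven stations (option B)."""
    kept = [(year, value) for year, value, n in series if n >= min_stations]
    if len(kept) < 2:
        raise ValueError("need at least two points to fit a trend")
    years, values = zip(*kept)
    return 100 * ols_slope(years, values)
```

Running `trend_per_century` on the same series with `min_stations=1` and `min_stations=7` gives the two trends that point 4 asks you to compare; if they differ materially, the partial-station points are driving the result.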

Manfred, here is a plot showing the discrepancy between averages of just the Dunedin and Auckland stations and the 7-station average (or composite values). The Y axis is the 7-station composite minus the 2-station “average.”

Now – what do you think you should do about the data points based only on 2 stations if you are presenting the plot as a 7-station average?

“Calm down Manfred…”

I’m actually quite calm. I understand very well what you’re saying, and I’ve looked carefully at the spreadsheets and graphs.

I suspect you are not understanding the concepts behind the calculation of anomalies. I suspect this because in your example above you use temperatures.

You need to go away for a while and read up on anomalies, and why they are used to combine temperature histories from spatially distributed sites. You really shouldn’t be posting inflammatory stuff without understanding the very basic concepts – you end up making a fool of yourself and others.

This is climatology 101, or even pre-requisite. I would expect more from someone who claims to be a scientist.

Manfred – I used temperatures here to simplify the situation. I understand the calculation of anomalies and am purely trying to get your acceptance in principle on what is the correct way to handle “averages” based on missing data.

(I only referred in passing to NIWA’s estimation of the “7-station composite” in my post because describing the detail would have lost people.)

Can you please answer my question and we can get to anomalies. (I also have a plot using anomalies which shows essentially similar things to the plot above).

OK Manfred – here is the graph representing the problem using the anomalies for the 7-station data. Again, this is the 7-station composite anomalies minus the 2-station “average” anomalies.

What do you think is the best way to handle data points where only a few stations are used to obtain the “average?”

I see you are still confused. OK, this is how it works:

You can’t just average temperatures from sites across a country. Each temperature average will be different. Some will be cooler, some hotter. So you have to baseline them to a common average first. In the case of NIWA they have chosen 1971-2000, but any common period will do. The resultant value is an anomaly.

For each station, if you have annual values, you calculate the average annual value for all the years in your baseline period. Then you subtract this average baseline value from each annual temperature. You end up with an anomaly for that year for that site. Of course, if you’re using monthly or even daily data, then you must calculate monthly or daily anomalies, but you get the point.

You can’t combine temperatures (your example is nonsensical), but you can combine anomalies, since they’re baselined. Is it best practice? Of course not, ideally one wants all 7 station data. But if you’ve only got two stations, and you insist (like NIWA) on using just two stations, then by combining anomalies instead of temperatures you don’t get the problem you’re making such a big deal about above.
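The baselining described above can be sketched as below. It is an illustration only: the per-station dict layout and the two made-up stations are hypothetical, and annual values are assumed. It shows the specific claim in the last paragraph, that averaging anomalies removes the fixed offset between a warm and a cool site when one of them is missing. It does not address the extra year-to-year noise in an average of only one or two sites, which is the separate point Ken presses in his replies.

```python
from statistics import mean

def anomalies(annual_temps, base_start=1971, base_end=2000):
    """annual_temps: dict year -> annual mean temperature (°C) for ONE station.
    Returns dict year -> anomaly relative to that station's own baseline mean."""
    base_years = [y for y in annual_temps if base_start <= y <= base_end]
    if not base_years:
        raise ValueError("station has no data in the baseline period")
    baseline = mean(annual_temps[y] for y in base_years)
    return {y: t - baseline for y, t in annual_temps.items()}

# Two made-up stations with the same weather but different climates:
# a warm site (about 15 °C) and a cool site (about 10 °C).
warm = {y: 15.0 for y in range(1971, 2001)}
cool = {y: 10.0 for y in range(1971, 2001)}
warm[1950], cool[1950] = 14.5, 9.5   # both 0.5 °C below their baselines
warm[1951] = 14.5                    # the cool site is missing in 1951

warm_a, cool_a = anomalies(warm), anomalies(cool)
# Averaging temperatures jumps from 12.0 (1950, both sites) to 14.5
# (1951, warm site only); averaging anomalies gives -0.5 in both years.
temp_avg_1950 = mean([warm[1950], cool[1950]])
temp_avg_1951 = mean([warm[1951]])
anom_avg_1950 = mean([warm_a[1950], cool_a[1950]])
anom_avg_1951 = mean([warm_a[1951]])
```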

Clearly, the NZCSC understood this, and you didn’t.

Remember, NIWA’s 7SS used only one station, then two, four etc. Any criticism of Treadgold’s method is a criticism of NIWA’s.

My apologies, when I wrote “Is it best practice?” I meant “Is it best practice to use only one station?”

Again, Manfred, you seem to be avoiding my question on the correct way of handling averages when data is missing. That is the issue.

I understand completely about anomalies and you appear to be attempting diversion away from the principle by a pointless pontification on these. To remove that obstacle to your response my last figure displayed anomalies.

Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations. And is it valid to calculate trends when there are a lot of data points “averaging” only 2 stations instead of 7?

How do you advise handling this situation?

You appear to be saying “ideally one wants all 7 station data.” But what if you haven't got it? Should you include the poor data, or calculate trends by including 2-station averages? Should this be done when there are long periods of missing station data, for example the 60 years shown in the plots of my post?

Missing data is a common issue.

Manfred, I think you need to do some more reading. I think you are getting confused between the 7SS and the 11SS. The original NZTR, the one that Treadgold was complaining about, is a fully composite series – that is, it does not have any missing annual values. The 11SS was produced later, using only sites with a continuous series (i.e. no adjustments of the sort that get RT all huffy). The 11SS does therefore have missing annual values, but it does always have at least 8 station values for most of its length. This is quite different from RT’s so-called composite, which for large chunks is the average of only 2 or 3 stations.

CTG,

The original spreadsheet for the 7SS (not the latest one) ran from 1853, and looked almost exactly like the NZCSC one, except for unadjusted values instead of Salinger’s.

The 11SS also had missing values, as you state.

Manfred – you are not answering the basic question.

“Remember, NIWA’s 7SS used only one station, then two, four etc. Any criticism of Treadgold’s method is a criticism of NIWA’s.” This is beside the point. Before you can judge either of those people or institutions wrong you need to be clear about the principle.

Is it appropriate to include such large stretches of “averages” which refer to only a few, or even one, station?

“Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations.”

No, but NIWA did. Therefore to compare with NIWA, it was necessary to copy what they did.

“And is it valid to calculate trends when there are a lot of data points “averaging” only 2 stations instead of 7?”

If NIWA chose to include this data then it is valid, when comparing unadjusted versus adjusted data.

Look at the 11SS. They said “Note that not all stations have annual mean temperature values for all years in 1931–2008.” And yet they calculated the trend using all the data. Are you going to post a criticism of the 11SS?

Generally one uses all the data for the trend. If you want to drop data for legitimate reasons, do so before you plot the graph or calculate the trend.

NIWA chose to use all the data in their graph, but then they chose to highlight only the 1908-2008 trend to confirm their “last 100 years” claim of 0.9C/century. This was inadvisable, since a glance at their graph shows the data declining from 1853 to the lowest point about 1908, which immediately opens them to criticisms of cherry-picking partial trends. Had they correctly plotted the full trend, it would have been less than 0.9C/century.

I see they have corrected this now with their new graph, which only runs from 1909. But the new graph did not exist at the time Treadgold’s graph was published.

In all, I see nothing wrong with the approach adopted by Treadgold, which was to follow NIWA’s lead in order to determine the adjustments.

But all this is diverting from the obvious.

Face it Ken, you stuffed up. Your point wasn’t about the minutiae of trend analysis. Your point was that you thought NIWA had infilled data.

From above:

“Notice how Treadgold has calculated the average anomaly value? Simply by taking the average of the anomalies all 7 stations! Even when some data is missing. Even when he has values for only 1 station!

This completely invalidates Treadgold’s analysis.

How did NIWA handle the problem of missing values? In their original presentation of this data (the one attacked by Treadgold in his report) they did not make this mistake. Instead they used a “7-station composite” – effectively a reconstruction based on estimating missing data.”

Yet NIWA did exactly that. They took the average even when they had the value for only one station. They did it for all their graphs. They didn’t infill. You misunderstood the 7-station composite temperature completely. I know, you know it.

Manfred – you say ““Do you think one should include “average” anomalies based only on say 2 stations in a plot representing 7 stations.”

No, but NIWA did.”

Well, that’s an admission, isn’t it? You believe this procedure was wrong. (Adding “but NIWA did” doesn’t make it right, does it?)

So you accept my argument that it is wrong to include all the early data where only a few stations contribute. That any derivation of trends may be faulty.

If NIWA did this then they are just as wrong. Although, in fairness they calculated averages of anomalies to use as a basis for then calculating their composite temperatures. As they say, where less than 7 station data was available they calculated a composite temperature using the anomalies of the available data. Where all 7 were available their composite temperature was a simple average.

(And no, Manfred, my point wasn’t that I “thought NIWA had infilled data.” Not at all – I was familiar with the spreadsheet. Effectively, they were trying to compensate for missing data by using the average temperatures from 1971-2000.)

It’s not clear from the spreadsheet I have what they then used to obtain data for their plot. (Unlike Treadgold’s spreadsheet, it doesn’t include the graph.) However, calculating an anomaly from the composite temperature in the normal way would be faulty because it just gives you your original data. (There would be no point in calculating a composite temperature.) If they did use that procedure then they were wrong – although I can appreciate that it is one way of handling isolated missing data. A detailed statistical procedure would be better.

However, we now have this data re-examined, and new adjustments worked out independently. In the process the decision was made not to use the early data which was described as unreliable. One reason for this would have been the huge missing blocks of data. A wise decision, I think.

And let’s not forget that after this independent analysis the resulting plot was extremely similar to the original one for that same time period.

Which rather exposed Treadgold’s malicious claims, didn’t it?

And Manfred, in all this you have had access to NIWA’s data. I have been asking Treadgold for a year to provide me his data and methods. I only have his spreadsheet now because he mistakenly revealed it in a directory on his blog.

You mean via CliFlo? The same way you do? Or do you mean the 7SS that was published by NIWA online for anybody to download?

It wasn’t an independent analysis. Who performed it? NIWA! Who reviewed it? The BoM! Who said they simply read the report but didn’t look at the data? The BoM!

Still don’t get it, do you?

Oh for heaven’s sake! I give up, you still don’t understand the basics!

I’ve had my say. You try to work it out.

Manfred – I was contrasting the normal availability of data from institutions like NIWA with the denial of access to data and information by Treadgold.

It was an independent analysis. Salinger no longer worked for NIWA. The analysis was done by different people starting from scratch. It appears that you don’t consider honest scientific work as independent.

Actually, Manfred, I don’t think you get it. Could you detail your understanding of how NIWA calculated “composite temperatures” in the original spreadsheet? It’s not completely straightforward but I managed it. And calculation of “average” anomalies for less than 7 station data was involved.

I have worked it out, Manfred. And I think you agree. Using average anomalies for data with missing stations over long blocks of time is shonky. Fortunately that question no longer arises with the NIWA graph as the missing data is relatively isolated.

However, I would still prefer a statistical procedure to handle any missing data – especially as it can be done as part of calculating trends.
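One statistical procedure of the kind mentioned here, sketched as an assumption rather than as what NIWA or anyone in this thread actually used, is to give each station its own offset and fit a single shared linear trend by least squares. Missing station-years are then simply absent rows, so no gap has to be filled in or averaged over. The record layout and function name are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

def trend_with_station_offsets(records):
    """Fit value = offset[station] + slope * (year - mean_year) by least squares.

    records: list of (station, year, value) tuples; missing station-years are
    simply not in the list. Returns (slope per year, {station: offset}), where
    each offset is that station's fitted level at the mean year of all records."""
    stations = sorted({s for s, _, _ in records})
    col = {s: i for i, s in enumerate(stations)}
    mean_year = np.mean([year for _, year, _ in records])
    X = np.zeros((len(records), len(stations) + 1))
    y = np.empty(len(records))
    for row, (s, year, value) in enumerate(records):
        X[row, col[s]] = 1.0             # station-specific intercept
        X[row, -1] = year - mean_year    # shared trend term, centred for stability
        y[row] = value
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[-1], dict(zip(stations, coef[:-1]))
```

With noise-free made-up data for a warm site reporting from 1900 and a cool site joining only in 1930, both sharing the same trend, this fit recovers the common trend exactly, whereas averaging the raw temperatures of whichever sites reported would show a spurious drop in 1930 when the cool site joins.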

“It wasn’t an independent analysis. Who performed it? NIWA! Who reviewed it? The BoM! Who said they simply read the report but didn’t look at the data? The BoM!”

Manfred, what exactly are you saying here? Please clarify any inferences you believe might be drawn from your observation above.

Manfred – my bad. I was looking at the revised 7SS, not the original. You are correct about the earlier data.

However, the report says that the earlier data were removed for precisely this reason, though – that having fewer data points makes the averages less reliable.

Calm down Ken. No need to shout. We realise this must be very very embarrassing to be shown up so badly.

David Parker – what are you on about?

I am certainly not shouting – if anything this thread has gone very quiet since my last reply to Manfred. (And quite frankly I expected more response. Treadgold has stayed away for example).

He seems to have accepted that it was wrong to include such faulty data.

So what’s your beef?

In any case, calculating a simple average of anomalies is going to introduce a lot of variation when there are only a few stations. This would have the effect of masking any trend.

It seems that NIWA’s original strategy (for using earlier data) would have reduced the variation by dividing the sum by 7 (i.e. assuming that the anomalies were zero for the stations with no data).
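The two estimators contrasted in this comment can be sketched as follows. The function names are hypothetical, and the spread calculation assumes, purely for simplicity, that station anomalies are independent with a common standard deviation σ; real neighbouring stations are positively correlated, so actual figures would differ. Under that assumption, dividing by the number of reporting stations k gives a spread of σ/√k, while dividing by 7 gives σ√k/7, which is smaller but pulls every partial year towards the baseline (zero anomaly).

```python
import math

def mean_of_available(anoms):
    """Average over the stations that reported, as NIWA's spreadsheet notes describe."""
    return sum(anoms) / len(anoms)

def sum_over_seven(anoms, n_total=7):
    """Divide by 7 regardless, i.e. treat missing stations' anomalies as zero."""
    return sum(anoms) / n_total

def spread(k, sigma=1.0, n_total=7):
    """Standard deviation of each estimator when k independent anomalies, each
    with standard deviation sigma, are available (a simplifying assumption).
    Returns (spread of mean_of_available, spread of sum_over_seven)."""
    return sigma / math.sqrt(k), sigma * math.sqrt(k) / n_total
```

For the 1860 Auckland/Dunedin pair quoted earlier, the first estimator gives about -0.17 and the second about -0.05; with k = 2 the assumed spreads are about 0.71σ and 0.20σ, and the two coincide once all seven stations report.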
